![Header image](/assets/_processed_/c/c/csm_Headerbild_Chiffriermaschinen_Rainer_3889c3d7be.png)

IGGI Logo Photo: Deutsches Museum

Digital cultures of technology and knowledge

IGGI - The Engineering Spirit and Engineers of Mind

Exaggerated expectations and fears dominate the debates on ‘artificial intelligence’ (AI), both internationally and here in Germany. But what were the original goals and intended uses of AI research in West Germany? Where was AI research initially located within science?


IGGI - The Engineering Spirit and Engineers of Mind: A History of AI in the Federal Republic of Germany

Funded by

The project (grant number 01IS19029) was funded by the Federal Ministry of Education and Research (BMBF).


Edited by

PD Dr. Rudolf Seising

PI: DFG project "ArtViWo"; PI: IGGI

Dr. Helen Piel

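BMBF research project "Eine Geschichte der KI in der BRD" (A History of AI in the Federal Republic of Germany), subproject "Künstliche Intelligenz und Kognitionswissenschaft" (Artificial Intelligence and Cognitive Science)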

Florian Müller

BMBF research project "Eine Geschichte der KI in der BRD" (A History of AI in the Federal Republic of Germany), subproject "Sprachverarbeitung" (Language Processing)

Dr. Dinah Pfau

DFG project "ArtViWo"

Jakob Tschandl

BMBF research project "Eine Geschichte der KI in der BRD" (A History of AI in the Federal Republic of Germany), subproject "Expertensysteme" (Expert Systems)

Project description

The project asked how AI developed in West Germany in the context of the German science and innovation system and eventually emerged as an internationally successful part of computer science. In addition to analyzing archival and other source material, we conducted oral history interviews with eyewitnesses in order to contextualize West German AI research within the recent history of science and technology.

“Artificial intelligence” (AI) currently dominates the debates

“Artificial intelligence” (AI) currently dominates the debates in science and technology, politics, economics, the arts and the media. But AI is more than self-driving cars, chess-playing computers or talking robots: AI is also a scientific discipline that hopes to imitate human intelligence with computers and even to construct machines with their own “intelligence”. The buzzword AI covers research on the design, processing and communication of information in and through machines. These are all abilities typically ascribed to humans.

IGGI: Ingenieur-Geist und Geistes-Ingenieure (the engineering spirit and engineers of mind)

The IGGI project researched the history of AI in the Federal Republic of Germany in order to further our understanding of the technologies known as “AI”. The acronym IGGI (the engineering spirit and engineers of mind) refers to a view held by early computer scientists, for whom programming did not result in a material product but rather in an abstract one aimed at problem solving. After establishing themselves as a scientific community in the mid-1970s, West German AI researchers began to wonder whether computers might be able to think. If so, there would be no difference between the problem solving done by computer programs and that done by the human mind. Such a functional equivalence between computer and brain is at the core of the computer metaphor, a central tenet of cognitive science.

In the 1980s, several research strands began to differentiate themselves within AI. Among these were automated theorem proving, the processing and understanding of natural languages and of images, and expert systems. Together with cognitive science, these four strands form the five lenses through which we examined AI in our project.

A central methodological element was securing and analysing material in archives as well as papers held by the researchers themselves. We also conducted oral history interviews with AI pioneers to preserve their memories, and archived these interviews as audio and video files.

You can see how we went about it in our online exhibition KI-Geschichte: Ein Making-Of (only in German).

Subprojects

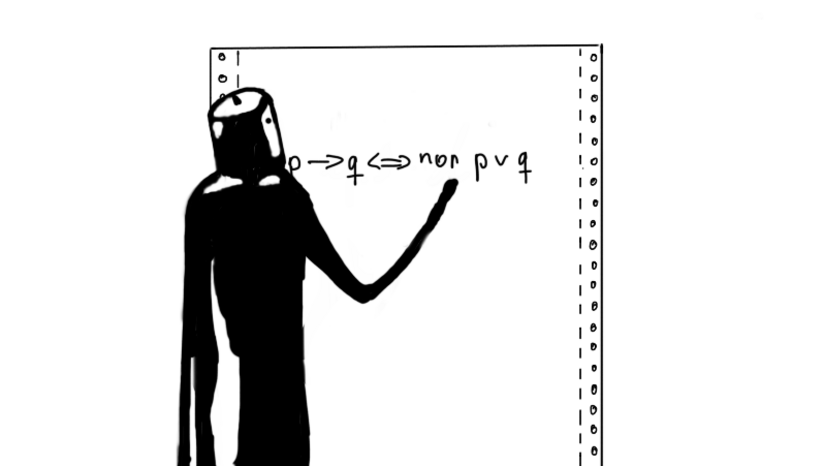

Photo: Illustration Dinah Pfau

Automated theorem proving

One of the oldest applications of AI is the computerised or computer-assisted proving of mathematical theorems.

For a mathematical theorem to be considered valid, it must be proven. Searching for a proof and carrying it out are two different tasks that mathematicians face. A proof proceeds by logical inferences through which the theorem is traced back to theorems that are already accepted as correct. When searching for a proof, mathematicians are often helped by experience and intuition, but they frequently also have to try out approaches and revise them step by step. Only then can they carry out the proof. But can computers search for and carry out mathematical proofs?

These questions are part of the founding narrative of AI research in the USA. It begins with a meeting organised by John McCarthy, Marvin Minsky, Claude Shannon and Nathaniel Rochester at Dartmouth College in 1956. There, Herbert Simon and Allen Newell presented their program "Logic Theorist", co-developed with Cliff Shaw, which proved some basic theorems from Russell and Whitehead's Principia Mathematica.

This subproject investigated the first developments in automated theorem proving in the Federal Republic of Germany. One of the first researchers in this field was Gerd Veenker, who was employed at the computing centre in Tübingen. In the 1960s he developed a "decision procedure for the propositional calculus of formal logic and its realisation in the computing machine" for his mathematics degree, and a "proof procedure for the predicate calculus" for his dissertation. In the 1970s, Veenker continued his research into automatic proofs as a professor in Bonn. During that time, the mathematicians Wolfgang Bibel of the Technical University of Munich and Jörg Siekmann of the University of Essex also worked in this field. Siekmann had gone to England for his doctorate in Artificial Intelligence. When this new field of research became established in the Federal Republic of Germany, he conducted research in Karlsruhe and later in Kaiserslautern. The result was a large number of theorem prover systems, which were used in logic programming, program synthesis and verification, and which are now part of the collection of the "Theorem Prover Museum" (https://theoremprover-museum.github.io/).
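To give a concrete sense of what a "decision procedure for the propositional calculus" does, the following minimal sketch decides whether a propositional formula is a tautology by checking every row of its truth table. It is not a reconstruction of Veenker's procedure, which is not described here; the nested-tuple encoding of formulas and the function names are hypothetical choices made for illustration.

```python
from itertools import product

# Hypothetical encoding: a formula is either a variable name (str) or a tuple
# ("not", f), ("and", f, g), ("or", f, g) or ("implies", f, g).

def variables(formula):
    """Collect the set of variable names occurring in a formula."""
    if isinstance(formula, str):
        return {formula}
    _op, *args = formula
    return set().union(*(variables(a) for a in args))

def evaluate(formula, assignment):
    """Evaluate a formula under a dict mapping variable names to booleans."""
    if isinstance(formula, str):
        return assignment[formula]
    op, *args = formula
    vals = [evaluate(a, assignment) for a in args]
    if op == "not":
        return not vals[0]
    if op == "and":
        return vals[0] and vals[1]
    if op == "or":
        return vals[0] or vals[1]
    if op == "implies":
        return (not vals[0]) or vals[1]
    raise ValueError(f"unknown connective: {op}")

def is_tautology(formula):
    """Decide validity by trying all 2**n assignments to the n variables."""
    names = sorted(variables(formula))
    for values in product([False, True], repeat=len(names)):
        if not evaluate(formula, dict(zip(names, values))):
            return False  # a falsifying assignment exists
    return True

# Peirce's law ((p -> q) -> p) -> p is a tautology; p -> q is not.
peirce = ("implies", ("implies", ("implies", "p", "q"), "p"), "p")
print(is_tautology(peirce))                 # True
print(is_tautology(("implies", "p", "q")))  # False
```

Because the propositional calculus has only finitely many assignments to check, such a procedure always terminates with a yes or no answer; the number of rows, however, doubles with every additional variable.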

List of interviews with contemporary witnesses and archives visited

Photo: Illustration Dinah Pfau

Natural language processing

The research field of natural language processing investigates how computers can process natural language. Well-known applications include machine translation and automatic chat programs. This subproject examined the historical development of computer-based language processing in Germany from the 1960s to the 1980s.

Natural language processing was established as a field of research within linguistics in Germany in the early 1960s. At that time, the aim was to use computers as a tool for linguistic research. The field changed in the mid-1970s, when researchers began to take an interest in language-oriented research within the emerging field of artificial intelligence in Germany. From then on, the focus was no longer solely on linguistic research but shifted towards application-oriented research aimed at enabling functioning human-machine communication. This shift was supported by a change in research funding in the Federal Republic of Germany from the mid-1970s onwards, which emphasized application-oriented research.

The historical research in this subproject focused on three case studies: the Hamburger Redepartner-Modell project at the University of Hamburg (1975-1981), where work was carried out on a dialog system; the Sonderforschungsbereich 100 Elektronische Sprachforschung at Saarland University (1973-1986), where research was carried out primarily on machine translation; and the work on computer-based language processing at the Institut für Deutsche Sprache (1964-1980), where, among other things, a question-answering system was developed.

List of interviews with contemporary witnesses and archives visited

Photo: Illustration Dinah Pfau

Image understanding

In 2020, this subproject began to focus on the topic of “image processing” (not to be confused with the computer-controlled generation of images, as in computer graphics). It quickly became clear that the machine-driven processing, manipulation and interpretation of images and text as information carriers was used in very different areas of science and society (medicine, physics and biology, but also communications engineering, remote sensing and many more). Research, too, was conducted under various terms (e.g. pattern recognition, image processing, image understanding). Often, but by no means always, the technologies behind this research were also referred to as “AI”.

But why did people even start evaluating images with machines? And with what means did they try to do this? To what extent has this changed the way we deal with these images and the impact they have? The project addressed questions like these using two exemplary case studies, both of which cover a period of around 20 years. The first case study analyzed the use of images in connection with the experimental practices of particle physics at DESY and examined their implications for approaches in computer science (around 1964-1980). The second case study addressed the technical and epistemic shifts in research at the intersection of communications engineering and cybernetics in Karlsruhe and examined their implications for later approaches to pattern recognition (around 1951-1970).

Based on two micro-studies of different experimental systems, this subproject applied the approach of the historian of science Hans-Jörg Rheinberger. Using Rheinberger's theory of the experimental system, it connected the history of technology with the history of science in order to analyze in detail the epistemic and technical conditions of knowledge production in the research on, and the application of, technical systems for evaluating and interpreting images. In this way, it contributed to the discussion about scientific knowledge production under the conditions of information and communication technologies, automation and artificial intelligence.

List of interviews with contemporary witnesses and archives visited

Examples of research conducted at that time, the projects DARVIN and BILDFOLGEN by Prof. Dr. Hans-Hellmut Nagel, can be found here.

Photo: Illustration Dinah Pfau

Expert systems

This subproject investigated an early attempt at the commercial application of artificial intelligence.

The basic idea of the so-called expert systems was to store the knowledge of an expert, e.g. a doctor, in a computer in the form of if-then rules. A user would ask the system questions, and the system would then use various search strategies and methods to search this knowledge base for answers. Because their answers were derived from such a knowledge base, expert systems were often also referred to as knowledge-based systems.
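The if-then principle described above can be illustrated with a minimal sketch of one possible search strategy, backward chaining: to answer a question, the system looks for rules whose conclusion matches it and then tries to establish each of their premises in turn. The rules, facts and function name below are invented toy examples and do not come from any historical expert system; real systems also used other strategies, such as forward chaining, and added features like certainty factors.

```python
# Hypothetical toy knowledge base: each rule is (premises, conclusion),
# read as "IF all premises hold THEN the conclusion holds".
RULES = [
    ({"fever", "cough"}, "respiratory_infection"),
    ({"respiratory_infection", "shortness_of_breath"}, "refer_to_specialist"),
]

def prove(goal, facts, rules, seen=frozenset()):
    """Backward chaining: is `goal` a known fact or derivable from the rules?"""
    if goal in facts:
        return True
    if goal in seen:  # guard against looping on cyclic rules
        return False
    seen = seen | {goal}
    for premises, conclusion in rules:
        # A matching rule succeeds only if every premise can itself be proven.
        if conclusion == goal and all(prove(p, facts, rules, seen) for p in premises):
            return True
    return False

patient = {"fever", "cough", "shortness_of_breath"}
print(prove("refer_to_specialist", patient, RULES))   # True
print(prove("refer_to_specialist", {"fever"}, RULES))  # False
```

Here the "question" is the goal passed to `prove`, and the answer is found by searching backwards from that goal through the rule base rather than by deriving every possible conclusion from the facts.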

The origin of this strand of AI research is usually traced back to the US system DENDRAL, which was developed at Stanford from 1965 onwards. In 1980, reports of a successful commercial application of an expert system in the USA attracted attention. When Japan focused on this technology in 1982 with the "Fifth Generation Computer Program", the USA and Europe responded with funding programs of their own, including the West German government in Bonn. A veritable "hype" began around the promising new expert systems, which for many became the epitome of artificial intelligence at the time. Government funding encouraged large corporations to set up AI development departments, and large research institutions also focused on expert system technology. The general public's interest in AI grew with the practical application of expert systems. However, when it became clear in the early 1990s that the Japanese "Fifth Generation Computer Program" would not achieve its ambitious goals and that commercially successful expert systems would remain the exception, government and industry scaled back their investments.

This subproject examined, from a discourse-theoretical perspective, the interrelationships between science, business, politics and the media in the attempt to make expert systems the first commercially successful application of AI research. Besides technical feasibility, a crucial factor for the practical application of expert systems was convincing the general public, business and politics of their benefits. The subproject described the discursive strategies used in this process.

List of interviews with contemporary witnesses and archives visited

Photo: Illustration Dinah Pfau

Artificial intelligence and cognitive science

Cognitive scientists want to know how cognitive processes (e.g. thinking, problem solving or perception) work in natural and technical systems such as humans or computers. To that end, a number of disciplines have joined forces, all sharing the premise that cognition is a form of information processing.

The subproject asked how this research area developed in the Federal Republic of Germany and how it relates to research into artificial intelligence (AI). Both areas are rooted in similar developments of the 1940s to 1960s, such as computer technologies, computer science, cybernetics and cognitive psychology. They also use similar methods (cognitive modelling) and have a similar (or, depending on one's point of view, the same) object of inquiry: natural and/or technical cognitive systems.

After first providing an overview of cognitive science’s roots in the US, the subproject focused on the Federal Republic in the 1980s. During this decade, cognitive science became increasingly visible in the West German science landscape, and a network of cognitive scientists began to form. At first, this took place primarily in smaller, local and informally organised working groups and in individual projects. Starting in 1985, the German Research Foundation (DFG) established three Priority Programmes. These connected interdisciplinary projects on cognitive science topics such as “knowledge”, bringing together researchers from psychology, computer science, philosophy, neuroscience and linguistics. Throughout the 1980s, many researchers and topics also had a place in both cognitive science and AI. Occasionally, researchers argued that either cognitive science or AI was the overarching discipline that included the other. Generally speaking, though, cognitive science can be said to be more interested in explanations, AI more in applications.

List of interviews with contemporary witnesses and archives visited

Events

Tandem Workshop in cooperation with the Karlsruhe Institute of Technology (KIT), "Die frühe Geschichte der Künstlichen Intelligenz" (The Early History of Artificial Intelligence), Part One, Karlsruhe, 17.-18.09.2020:

The first workshop, at which the participants presented their researched materials (manuscripts, artifacts, publications and eyewitness accounts) to one another and jointly discussed fruitful research questions and interesting perspectives, took place on 17 and 18 September in Karlsruhe. It was organized by the project "Die Zukunft Zeichnen - Technische Bilder als Element historischer Technikzukünfte in der frühen Künstlichen Intelligenz" (Drawing the Future: Technical Images as an Element of Historical Technological Futures in Early Artificial Intelligence) of the HEiKAexplore research bridge "Autonomous systems in the area of conflict between law, ethics, technology and culture" of Heidelberg University and KIT.

Tandem Workshop in cooperation with the Karlsruhe Institute of Technology (KIT), "Die frühe Geschichte der Künstlichen Intelligenz" (The Early History of Artificial Intelligence), Part Two, Munich, 20.-21.05.2021:

For the second workshop on the history of artificial intelligence, texts were circulated in advance and approaches were developed jointly. This interdisciplinary workshop addressed not only historical approaches but also, explicitly, philosophical, media-theoretical and cultural-theoretical ones.

Montagskolloquium (Monday Colloquium) of the Deutsches Museum, "Thinking Machines: History, Present and Future of Artificial Intelligence", April to July 2021:

The international and interdisciplinary lecture series "Thinking Machines: History, Present and Future of Artificial Intelligence" consisted of seven talks and provided a step towards an integrated perspective on artificial intelligence. The series in the summer term 2021 was jointly hosted by the Research Institute for the History of Science and Technology, Deutsches Museum, as part of its traditional "Montagskolloquium"; the STS group at the European New School of Digital Studies, EUV; the Munich Center for Technology in Society (MCTS), TUM; and the Philosophy of Computing group, ICFO, Warsaw University of Technology.

International Conference "AI in Flux – Histories and Perspectives on Artificial Intelligence", online, 29.11.-01.12.2021:

We gathered historians of science and technology, philosophers and computer scientists to discuss the transformations and circulations of the idea, science and technology of artificial intelligence since it left its original US context. Our two-and-a-half-day online conference consisted of two parts: a half day focusing on discussions with AI pioneers, and two days of presentations from interdisciplinary perspectives on histories of artificial intelligence.

Conference "Was war Künstliche Intelligenz? Konturen eines Forschungsfeldes 1975-2000 in Deutschland" (What Was Artificial Intelligence? Contours of a Research Field in Germany, 1975-2000), Berlin, 15-16.05.2022

Invited to the three-day event were protagonists of AI development at the time in both West and East Germany, as well as researchers who contextualized the various subject areas of artificial intelligence research and development from historical, cultural and media studies perspectives. The impetus for this conference came from the "Fachgruppe Informatik- und Computergeschichte" (special interest group on the history of informatics and computing) within the German Informatics Society (GI).

Conference "(Er)Zeugnisse des Digitalen – Unsichtbares sichtbar machen" (Products and Testimonies of the Digital: Making the Invisible Visible), Bonn, 02.-03.05.2022

During our conference "(Er)Zeugnisse des Digitalen" at the Deutsches Museum Bonn, representatives from universities and museums discussed the challenges of dealing with software as an artefact in a museum context.

Conference documentation (only in German)

Annual Conference of the German Society for the History of Science, Medicine and Technology "Man – Machine – Mobility", Ingolstadt, 13-15.09.2023

The annual conference, organized by us together with the Deutsches Medizinhistorisches Museum Ingolstadt, addressed the historical dimensions of the topics "Man and Machine", "Machine and Mobility", "Mobility and Man" as well as their transitions and demarcations.

Closing Event of the IGGI Project, Munich, 15.12.2023

On December 31, 2023, the funding of the IGGI project by the Federal Ministry of Education and Research ended. We invited colleagues, friends, sponsors and contemporary witnesses to celebrate our project with us. The internationally renowned AI historian Stephanie Dick gave a keynote on the mathematical foundations of AI, and Rudolf Seising and Helen Piel summed up our research of the past years. This was followed by a talk by the librettist and playwright Georg Holzer on the artistic interpretation of the history of science in the form of an opera about Alan Turing. The evening concluded with an art performance by the opera collective DIVA.

All program points of the evening are available here:

Introduction by Ulf Hashagen, Director of the Research Institute of the Deutsches Museum (only in German)

Lecture by Stephanie Dick: "Castles in the Sky": Reflections on the Mechanical Inexhaustibility of Mathematics

Lecture by Rudolf Seising and Helen Piel: Perspectives on the beginnings of German AI history (only in German)

Insights into the opera "Turing" with Georg Holzer (only in German)

Art performance by the opera collective DIVA and Acknowledgements

Publications and Presentations

List of all publications by the IGGI project team: To the PDF

In December 2023, an anthology with the first results of our research was published in the series "Deutsches Museum Studies". You can download it here (only in German): To the anthology

List of all presentations by the IGGI project team: To the PDF

In December 2020, the exhibition service of the Deutsches Museum produced two M*Vlog contributions with him on the topic of "The History of Artificial Intelligence in the Federal Republic of Germany" and published them in January 2021 (only in German):
